Contributed works of Grigory Filatov

Adaptation FiveWin TsBrowse class

Color Table

Center Window’s Title

Closes application when no activity

Copy Protection (Get BIOS Name)

GetFonts

Get list of all controls

The Harbour implementation of codeblocks

Author : Ryszard Glab <rglab@imid.med.pl>

Compilation of a codeblock.

During compile time the codeblock is stored in the following form:

- the header

- the stream of pcode bytes

The header stores information about referenced local variables.

+0: the pcode byte for _PUSHBLOCK

+1: the number of bytes that defines a codeblock

+3: number of codeblock parameters (declared between || in a codeblock)

+5: number of used local variables declared in procedure/function where

the codeblock is created

+7: the list of procedure/function local variables positions on the eval

stack of procedure/function. Every local variable used in a codeblock

occupies 2 bytes in this list. When nested codeblocks are used then this

list is created for the outermost codeblock only.

+x: The stream of pcode bytes follows the header.

+y: the pcode byte for _ENDBLOCK

Creation of a codeblock.

When HB_P_PUSHBLOCK opcode is executed then the HB_ITEM structure is created

and placed on the eval stack. The type of item is IT_BLOCK. The value of this

item is a pointer to HB_CODEBLOCK structure. Additionally this item stores the

base of static variables defined for the current function/procedure - this

is used during a codeblock evaluation when the evaluation is called from a code

from other PRG module. Also the number of expected parameters is stored.

The HB_CODEBLOCK structure stores a pointer to the pcodes stream that is

executed during a codeblock evaluation. It stores also the pointer to a table

with local variables references. Values of all local variables defined in a

procedure and used in a codeblock are replaced with a reference to a

value stored in a global memory variables pool. This allows the correct access

to detached local variables in a codeblock returned from this function (either

directly in RETURN statement or indirectly by assigning it to a static or

memvar variable). This automatic and unconditional replacement is required because

there is no safe method to find if a codeblock will be accessed from an outside

of a function where it is created.

When nested codeblocks are used, only the outermost codeblock creates the table; all inner codeblocks use this table. The first element of the table contains a reference counter for the table. This allows the table to be shared between nested codeblocks: it is deleted when there are no more references to it. Sharing is needed because an inner codeblock can be created during evaluation of an outer codeblock, when the local variables no longer exist, as in this example:

PROCEDURE Main()

   PRIVATE foo, bar

   Test()
   Eval( foo )
   Eval( bar )

PROCEDURE Test()

   LOCAL a := "FOO", b := "BAR"

   foo := {|| a + ( bar := Eval( {|| b } ) ) }

   RETURN

Evaluation of a codeblock.

Parameters passed to a codeblock are placed on the eval stack before a

codeblock evaluation. They are accessed just like usual function

parameters. When a codeblock parameter is referenced then its position on the

eval stack is used. When a procedure local variable is referenced then the

index into the table of local variables positions (copied from the header) is

used. The negative value is used as an index to distinguish it from the

reference to a codeblock parameter.
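The difference between a codeblock parameter and a detached local can be seen in a small program. This is a minimal sketch rather than part of the original text; the names MakeCounter, nTotal and nStep are made up for illustration.

```harbour
PROCEDURE Main()

   LOCAL bAdd := MakeCounter()

   // nStep is a codeblock parameter: it is passed on the eval stack.
   // nTotal is a detached local: it outlives the call to MakeCounter().
   ? Eval( bAdd, 5 )    // running total is now 5
   ? Eval( bAdd, 10 )   // running total is now 15

   RETURN

FUNCTION MakeCounter()

   LOCAL nTotal := 0

   // The block is returned from the function, so nTotal must be detached
   RETURN {| nStep | nTotal += nStep }
```

The second Eval() continues from the first result, which is only possible because the value of nTotal is kept alive after MakeCounter() has returned.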

Incompatibility with Clipper.

1) Detached locals passed by reference

Harbour and Clipper differ slightly in how they handle variables passed by reference in a codeblock. The following code shows the difference (thanks to David G. Holm):

PROCEDURE Main()

LOCAL nTest

LOCAL bBlock1 := MakeBlock()

LOCAL bBlock2 := {|| DoThing( @nTest ), QOut( "From Main: ", nTest ) }

Eval( bBlock1 )

Eval( bBlock2 )

RETURN

FUNCTION MakeBlock()

LOCAL nTest

RETURN {|| DoThing( @nTest ), QOut( "From MakeBlock: ", nTest ) }

FUNCTION DoThing( n )

n := 42

RETURN NIL

In Clipper it produces:

From MakeBlock: NIL

From Main: 42

In Harbour it produces (it is the correct output, IMHO):

From MakeBlock: 42

From Main: 42

2) Scope of undeclared variables

Consider the following code:

PROCEDURE Main()

LOCAL cb

cb := Detach()

? Eval( cb, 10 )

RETURN

FUNCTION Detach()

LOCAL b := {| x | x + a }

LOCAL a := 0

RETURN b

In Clipper the 'a' variable in a codeblock has the *local* scope however in

Harbour the 'a' variable has the *private* scope. As a result, in Clipper

this code will print 10 and in Harbour it will raise 'argument error' in

'+' operation.

This is also true when the 'a' variable is declared as PRIVATE:

PROCEDURE Main()

LOCAL cb

PRIVATE a

cb := Detach()

? Eval( cb, 10 )

RETURN

The above code also prints 10 in Clipper (even if compiled with -a or -v

switches)
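One way to sidestep this difference, assuming the local is what the codeblock is meant to see, is to declare the variable before the codeblock that uses it. The sketch below only reorders the two declarations from the example above; with that order 'a' is already a known local when the codeblock is compiled.

```harbour
PROCEDURE Main()

   LOCAL cb := Detach()

   ? Eval( cb, 10 )   // evaluates x + a with a == 0

   RETURN

FUNCTION Detach()

   LOCAL a := 0                  // declared first, so the block below binds to it
   LOCAL b := {| x | x + a }

   RETURN b
```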

Source : https://github.com/harbour/core/blob/master/doc/codebloc.txt

Chapter 8

DBPX Driver Installation and Usage

DBPX is the Paradox 3.5 compatible RDD for Clipper. It connects to the low-level database management subsystem in the Clipper architecture. When you use the DBPX RDD, you add a number of features, including the ability to:

. Create, access, and modify Paradox tables, records, and fields

. Create, select, and activate secondary indexes on Paradox tables

. Create and modify Paradox table structures, including primary index fields

. Use explicit record and file locks with concurrent execution of other Clipper applications

. Import Paradox tables directly into Clipper arrays

In This Chapter

This chapter explains how to install DBPX and how to use it in your applications. The following major topics are discussed:

. Overview of the DBPX RDD

. Installing DBPX Driver Files

. Linking the DBPX Driver

. Using the DBPX Driver

Overview of the DBPX RDD

The DBPX driver lets you create and maintain (.db), (.px), (.x??), and (.y??) files with features different from those supplied with the original DBFNTX driver and compatible with files created under Paradox 3.5. The new features are supplied in the form of several syntactical additions to database and indexing commands and functions. Specifically you can:

. Create tables that recognize the standard Clipper data types as well as Currency ($) and Short (S) numbers between -32,767 and +32,767

. Create equally efficient keyed and unkeyed tables

. Create, select, and activate secondary indexes on Paradox tables

The DBPX driver provides simple, seamless access to the Paradox database system. The Clipper application programmer who intends to access Paradox tables with the “VIA” clause need only include the RDD header file at compile time and make the appropriate libraries available at link time.

Paradox stores data in tables (known to Xbase developers as data files (.db)’s), consisting of fields and records. Unlike Xbase databases, a Paradox database refers to a group of files that are related to each other in some way, rather than to one file.

Also, Paradox employs the concept of companion files, known as objects, that are related to the table. Some examples of object files are report forms, indexes, and data entry forms. A table and its accompanying objects are referred to as a family.

It is easy to identify objects belonging to a particular family since they all have the same base filename and are distinguished by their extensions as shown in the table below.

Paradox File Descriptions

------------------------------------------------------------
Extension       Object
------------------------------------------------------------
.DB             Table
.PX             Primary Index
.X?? or .Y??    Secondary Index
.F or .F??      Data Entry Forms
.R or .R??      Report Formats
.G or .G??      Graph Specifications
.SET            Image Settings
.VAL            Field Validity Specifications
------------------------------------------------------------

The DBPX driver only deals with the table and index files (.db, .px, .x??, and .y??), so only these files are discussed here.

Though Paradox tables are limited to 8 character filenames, each table can contain an unlimited number of records in files up to 256M in size. Paradox records in nonkeyed tables can be up to 4000 bytes each while keyed tables have a 1350 byte limitation. Each record can contain up to 255 fields of up to 255 characters each.

There are some field naming restrictions you must observe:

. Although the Paradox file structure allows field names to be up to 25 characters long, Clipper symbols can only be 10 characters, so DBPX truncates the field name to 10 characters.

. The Paradox file structure allows embedded spaces in field names. Since this is illegal in Clipper, the DBPX driver converts spaces into underscores (_).

. Field names may not be duplicated in the same table.

Also, most Paradox data types directly match data types in standard Xbase data files, with these differences:

. Paradox tables support both the Numeric (N) data type as well as a more specific Currency ($) data type. Both the N and $ data types can have 15 significant digits. Numeric types that exceed this length are rounded and stored as scientific notation. Also, DBPX supports the Short (S) data type to represent numbers between -32,767 and +32,767.

. The Alphanumeric field type allows all ASCII characters except embedded nulls (ASCII 0). The Alphanumeric type is identical to the Character (C) data type in Xbase. Paradox limits this field type to 255 characters.

. Paradox also supports a Date (D) field type, stored as a long integer. It can contain any value between January 1, 100 A.D. and December 31, 9999.

Installing DBPX Driver Files

The DBPX RDD is supplied as the file DBPX.LIB.

The Clipper installation program installs this driver in the \CLIPPER5\LIB subdirectory on the drive that you specify, so you need not install the driver manually.

Linking the DBPX Database Driver

To link the DBPX driver, you must specify DBPX.LIB to the linker along with your application object (.OBJ) modules.

1. To link with .RTLink using positional syntax:

C>RTLINK <appObjectList> ,,,DBPX

2. To link with .RTLink using freeformat syntax:

C>RTLINK FI <appObjectList> LIB DBPX

Note: These link commands all assume the LIB, OBJ, and PLL environment variables are set to the standard locations. They also assume that the Clipper programs were compiled without the /R option.

Using the DBPX Database Driver

To use Paradox files in a Clipper program:

1. Place REQUEST DBPX at the top of each program file (.prg) that opens a database file using the DBPX driver.

2. Specify the VIA “DBPX” clause if you open the database file with the USE command.

-OR-

3. Specify “DBPX” for the <cDriver> argument if you open the database file with the DBUSEAREA() function.

-OR-

4. Use RDDSETDEFAULT( “DBPX” ) to set the default driver to DBPX.

Except in the case of REQUEST, the RDD name must be a literal character string or a variable. In all cases it is important that the driver name be spelled correctly using uppercase letters.

The following program fragments illustrate:

   REQUEST DBPX
   . . .
   USE Customers INDEX Name, Address NEW VIA "DBPX"

-OR-

   REQUEST DBPX
   RDDSETDEFAULT( "DBPX" )
   . . . .
   USE Customers INDEX Name, Address NEW
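The third option listed above, DBUSEAREA(), has no fragment of its own. A minimal sketch follows, assuming a toolchain where the DBPX driver is linked as this chapter describes; the alias "Cust" and the shared/read-only choices are illustrative only.

```harbour
REQUEST DBPX

PROCEDURE Main()

   // dbUseArea( lNewArea, cDriver, cName, cAlias, lShared, lReadOnly )
   dbUseArea( .T., "DBPX", "Customers", "Cust", .T., .F. )

   IF NetErr()
      // A shared open can fail when another process holds the file
      ? "Could not open Customers"
   ELSE
      ? "Opened via", rddName()
      Cust->( dbCloseArea() )
   ENDIF

   RETURN
```

As with the VIA clause, the driver name is passed as an uppercase character string.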

Index Management

The greatest variation from the standard Xbase database design in Paradox tables is index management. As in other systems, Paradox indexes are an efficient method of dynamically sorting or locating specific data within a table without forcing a search of all data in that table. Paradox tables take two forms: unkeyed and keyed.

An unkeyed table has no fields in its structure that have been identified as specific index keys. Therefore, records are maintained in natural order. New records are added to the end of an existing table, and the unique identity for each record is a record number.

Unlike Xbase data files, unkeyed tables are not more efficient in design or faster to traverse than keyed tables. This is because Paradox tables are built as linked lists rather than fixed-length, sequential tables. Therefore, it is actually less efficient to SKIP through an unkeyed table than it is through a keyed table.

A keyed table, on the other hand, can be lightning fast as long as the data you seek is part of the key. Otherwise, just as in an unkeyed table, you are forced to do a sequential search through the table’s data fields.

Paradox tables support two types of keys or indexes.

. Primary

. Secondary

Primary Indexes

Primary indexes are directly tied to keyed tables because a primary index indicates the table is keyed. Simply, it is impossible to have a keyed table without a primary index. If you remove the primary index from a keyed table it becomes an unkeyed table.

When you identify one or more of the table’s fields as a key field (by placing an asterisk (*) at the end of the field name) during table creation/restructuring, these fields are used to create a primary index. (Note that all key fields must be together as the first fields in a table). This invisibly rebuilds the table’s structure, though in operation it only seems to change or create the key index.

Once you identify this primary key, the table is automatically maintained in the key field order and all new records are checked to make sure that no duplicate keys are added to the table. This type of index is called a unique key index. You may have only one primary key per table, but this key can be a composite of many fields in the table. You may only modify the primary key by restructuring the table.

If it is necessary to change a primary key and restructure a table, all data in the table will still be bound to the unique key restriction. This is important if you change the primary key by adding a new field to it and there is already data in the table where this new composite key would have duplicates.

DBPX handles this situation by generating a runtime error and removing every record that violates this unique key and moving it to another table named KEYVIOL.db which has the identical structure of the offending table.

The KEYVIOL.db is automatically generated whenever this situation occurs. If there is already a KEYVIOL table, it is overwritten. Because of this you should always check for the existence of a KEYVIOL.db table immediately after any type of table restructuring.

Secondary Indexes

Secondary indexes are more like common Xbase-type indexes because they can be generated or modified on the fly without having any effect on the data or table structure and aren’t restricted to unique key data.

Unlike Xbase indexes, secondary indexes can only contain a single field as their key. As mentioned earlier, primary indexes are automatically maintained so that they are always up to date. Secondary indexes are created in two different types.

. Incremental (for keyed tables)

. Independent (for unkeyed tables)

Independent indexes are created only for unkeyed tables and are not dynamically maintained in any way. Because of this they can only be considered accurate at the time of their creation. If data changes inside the table that affects the index, the index must be completely regenerated before it can be considered useful again.

Alternately, incremental indexes are created only for keyed tables and are automatically maintained similarly to primary indexes except that instead of a complete rebuild at every change, only the portion of the index affected is updated. Incremental indexes are preferable when you are handling large tables since they take considerably less time and energy to keep accurate.

Temporary Indexes

ALL, NEXT, RECORD, and REST are all supported in the scoping expressions. The syntax of these keywords is identical to that used in Clipper. Note that you can only use one scope keyword at a time. If more than one of these keywords is encountered in a scoping expression, then the last keyword in the expression is the option used.

The ALL keyword (default) specifies that all records in the table should be included in the operation, starting at the first record.

NEXT processes the specified number of records, starting with the current record. For example, NEXT 5 would process the current record and the four records following it.

The RECORD keyword identifies a specific record to process. The desired record number should follow the keyword RECORD. To process record number 3, you would include “RECORD 3” in the expression.

The REST keyword causes processing to begin with the current record, instead of starting at the beginning of the table.
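The four scope keywords can be tried with any scoped command. The sketch below uses COUNT on a scratch table opened with the default driver rather than DBPX, purely so that it runs on its own; the file name scopedemo and the field ID are hypothetical.

```harbour
PROCEDURE Main()

   LOCAL nAll, nNext, nRest, nOne, i

   // Scratch table with six records (file name is hypothetical)
   dbCreate( "scopedemo", { { "ID", "N", 3, 0 } } )
   USE scopedemo NEW
   FOR i := 1 TO 6
      dbAppend()
      FIELD->ID := i
   NEXT

   COUNT TO nAll                 // ALL is the default scope: starts at the first record
   dbGoto( 3 )
   COUNT TO nNext NEXT 2         // the current record and the one after it
   dbGoto( 3 )
   COUNT TO nRest REST           // from the current record to the end of the table
   COUNT TO nOne RECORD 3        // record number 3 only

   ? nAll, nNext, nRest, nOne

   USE
   RETURN
```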

Sorting

In the event that you want to reorder a table based on field data but don’t need or want to have an index attached to it, you have the option of sorting the table based on the current index. This entails a simple copy from a keyed table to an unkeyed table using the table sort function.

Passwords and Security

Although the Paradox DBMS cannot be considered a data dictionary system, it does have some special characteristics that make it more suitable to networks than the standard Xbase tables. One of these features is the level of security available.

There are two methods to make sure that your data is secure: master passwords and auxiliary passwords. As the owner of a table, you can limit access by attaching a master password to it. Auxiliary passwords can also be identified to establish access to the table and its family.

Once any type of password is identified for a table, it is encrypted. This protects it not only from unauthorized Paradox users but also from anyone trying to dissect it at the DOS file level. The encryption method used by Paradox is literally unbreakable and if you (or your users) forget a table password, there is no way to recover that information.

Auxiliary passwords allow access control at the table and field levels. Access to tables can be restricted to:

. ReadOnly: No changes to the table can be made

. Update: Changes to nonkey fields are allowed, no records can be added or deleted

. Entry: Same as update except that new records can be added

. InsertDelete: Same as Entry except that records can be inserted and deleted

. All: Full access including restructuring and table deletion

Access to the fields can be identified as:

. None: This field data cannot be displayed to the user

. ReadOnly: User can see the field value, but cannot change it

. All: Full access

With DBPX, you may perform basic database operations on Paradox tables without code changes.

Note that because Paradox tables can have primary indexes which are actually part of the table structure specification, when you open a Paradox table, its associated primary index (if applicable) is also opened and activated. The only exception to this rule is if you indicate that you want a secondary index to be activated at the time you open the table. If no primary index is available and no secondary index is specified, the table is opened in natural sequence order.

You can have up to twenty-four Paradox tables open simultaneously. These may be separate tables or the same table repeatedly or any variation in between. This might be important if you want to have more than one secondary index active for a single table, allowing you to move from one work area to another with the only change being the index order of the data in the table. Be careful with this type of multiviewed approach, however, since you will be eating up memory for each work area, despite the fact that they refer to the same table.

Sharing Data in Networks

The DBPX driver supports the native Clipper single-lock locking scheme. Therefore, in a shared environment, your application and Paradox will not see each other’s record locks. This may result in some concurrency corruption and errors.

In a shared environment, DBPX performs no record buffering; it writes all changes to disk immediately.

Concurrency is an issue whenever your application is running either on a network or in some other shared environment. One example of a non-network shared environment is when your application is called from another program (like Paradox, Quattro Pro, etc.) that also has access to the Paradox tables. Even if you don’t have any plans to use your program on a network, you should design it to be smart enough not to become a problem if faced with this type of shared environment.

Also be aware that many networks have different rights and privilege restrictions and you should know what they are and how to handle them.

Using (.px) and (.ntx) Files Concurrently

You can use (.px), (.x??), (.y??), and (.ntx) files concurrently in a Clipper program like this:

   REQUEST DBPX

   // (.ntx) file using default DBFNTX driver
   USE File1 INDEX File1 NEW

   // (.px) file using DBPX driver
   USE File2 VIA "DBPX" INDEX File2 NEW

Note, however, that you cannot use (.px) and (.ntx) files in the same work area. For example, the following does not work:

USE File1 VIA "DBFNTX" INDEX File1.ntx, File2.px

Summary

In this chapter, you were given an overview of the features and benefits of the DBPX RDD. You also learned how to link this driver and how to use it in your applications.

Consider a table of customer records with two character fields, customer ID and customer name:

| Cust_ID | Cust_Name |
| CC001 | Pierce Firth |
| CC002 | Stellan Taylor |
| CC003 | Chris Cherry |
| CC004 | Amanda Baranski |

All the usual operations on a table are available: APPEND, DELETE, SEEK and so on; note that SEEK also requires an index file.

Listing this table is quite simple:

USE CUSTOMER

WHILE .NOT. EOF()

? CUST_ID, CUST_NAME

DBSKIP()

ENDDO

If our table is sufficiently small, we can find a customer record without an index and SEEK:

LOCATE FOR CUST_ID = "CC003"

? CUST_ID, CUST_NAME

If we want all our data to reside in memory, so that we can manage it more simply and quickly, we can use an array (with some consideration of the table's size; if it is too big, this method will be problematic):

aCustomer := {} // Declare / define an empty array

USE CUSTOMER

WHILE .NOT. EOF()

AADD(aCustomer, { CUST_ID, CUST_NAME } )

DBSKIP()

ENDDO

Traversing this array is quite simple :

FOR nRecord := 1 TO LEN( aCustomer )

? aCustomer[ nRecord, 1 ], aCustomer[ nRecord, 2 ]

NEXT

or :

a1Record := {}

FOR EACH a1Record IN aCustomer

? a1Record[ 1 ], a1Record[ 2 ]

NEXT

And locating a specific record is simple too:

nRecord := ASCAN( aCustomer, { | a1Record | a1Record[ 1 ] == "CC003" } )

? aCustomer[ nRecord, 1 ], aCustomer[ nRecord, 2 ]

A lot of array functions are ready to use for maintaining this array: ADEL(), AADD(), AINS() etc.
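A short sketch of such maintenance follows. It builds the array by hand instead of reading CUSTOMER.DBF so that it can run on its own, and it also guards against ASCAN() returning 0, which happens when no element matches.

```harbour
PROCEDURE Main()

   LOCAL aCustomer := { ;
      { "CC001", "Pierce Firth" }, ;
      { "CC002", "Stellan Taylor" }, ;
      { "CC003", "Chris Cherry" } }
   LOCAL nPos, a1Record

   AAdd( aCustomer, { "CC004", "Amanda Baranski" } )   // append at the end

   // AScan() returns 0 when nothing matches, so test before indexing
   nPos := AScan( aCustomer, {| a | a[ 1 ] == "CC002" } )
   IF nPos > 0
      ADel( aCustomer, nPos )                     // shifts later elements up, last becomes NIL
      ASize( aCustomer, Len( aCustomer ) - 1 )    // drop the trailing NIL
   ENDIF

   // Sort by name (second column)
   ASort( aCustomer, , , {| x, y | x[ 2 ] < y[ 2 ] } )

   FOR EACH a1Record IN aCustomer
      ? a1Record[ 1 ], a1Record[ 2 ]
   NEXT

   RETURN
```

ADEL() does not shrink the array by itself, which is why it is paired with ASIZE() here.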

Now, let’s see how we could use a hash to do the same job:

hCustomer := { => } // Declare / define an empty hash

USE CUSTOMER

WHILE .NOT. EOF()

hCustomer[ CUST_ID ] := CUST_NAME

DBSKIP()

ENDDO

Let’s traverse it:

h1Record := NIL

FOR EACH h1Record IN hCustomer

? h1Record:__ENUMKEY(), h1Record:__ENUMVALUE()

NEXT
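Where the array version needs ASCAN() to locate a record, a hash can be addressed directly by its key. A minimal sketch, with the hash filled by hand so that it runs without the table; the key "CC999" and the default text are made up for the example.

```harbour
PROCEDURE Main()

   LOCAL hCustomer := { => }
   LOCAL cKey := "CC003"

   hCustomer[ "CC001" ] := "Pierce Firth"
   hCustomer[ "CC003" ] := "Chris Cherry"

   // Direct lookup by key; no scan and no index file involved
   IF hb_HHasKey( hCustomer, cKey )
      ? cKey, hCustomer[ cKey ]
   ENDIF

   // hb_HGetDef() returns a default instead of raising an error for a missing key
   ? hb_HGetDef( hCustomer, "CC999", "(unknown customer)" )

   // Remove an entry and show how many remain
   hb_HDel( hCustomer, "CC001" )
   ? Len( hCustomer )

   RETURN
```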

Now let's complicate our job a bit by adding a few fields to the table:

| No: | Field Name | Type | Width | Dec | Description |
| 1 | CUST_ID | C | 5 | 0 | Id ( Code ) |
| 2 | CUST_NAME | C | 10 | 0 | Name |
| 3 | CUST_SNAM | C | 10 | 0 | Surname |
| 4 | CUST_FDAT | D | 8 | 0 | First date |
| 5 | CUST_ACTV | L | 1 | 0 | Is active ? |
| 6 | CUST_BLNCE | N | 11 | 2 | Balance |

The <key> part of a hash element may be of C / D / N / L type, while the <xValue> part may be ANY type of data, exactly as in arrays.

So, we can store the field values other than the first ( ID ) as the elements of an array:

hCustomer := { => } // Declare / define an empty hash

USE CUSTOMER

WHILE .NOT. EOF()

a1Data:= { CUST_NAME, CUST_SNAM, CUST_FDAT, CUST_ACTV, CUST_BLNCE }

hCustomer[ CUST_ID ] := a1Data

DBSKIP()

ENDDO

Let’s traverse it:

h1Record := NIL

FOR EACH h1Record IN hCustomer

a1Key := h1Record:__ENUMKEY()

a1Data := h1Record:__ENUMVALUE()

? a1Key

AEVAL( a1Data, { | x1 | QQOUT( x1 ) } )

NEXT

*-._.-._.-._.-._.-._.-._.-._.-._.-._.-._.-._.-._.-._.-._.-._.-._.-._.-._

/*

Hash vs Tables

*/

#define NTrim( n ) LTRIM( STR( n ) )

#define cLMarj SPACE( 3 )

PROCEDURE Main()

SET DATE GERM

SET CENT ON

SET COLO TO "W/B"

SetMode( 40, 120 )

CLS

hCustomers := { => } // Declare / define an empty PRIVATE hash

IF MakUseTable()

Table2Hash()

* Here the hash hCustomers may be altered in any way

ZAP

Hash2Table()

ELSE

? "Couldn't make / USE table"

ENDIF

?

@ MAXROW(), 0

WAIT "EOF HashVsTable.prg"

RETURN // HashVsTable.Main()

*-._.-._.-._.-._.-._.-._.-._.-._.-._.-._.-._.-._.-._.-._.-._.-._.-._.-._.-._.-._.-._.-._.-._.-._.-._.

PROCEDURE Table2Hash()

hCustomers := { => }

WHILE .NOT. EOF()

hCustomers[ CUST_ID ] := CUST_SNAM

DBSKIP()

ENDDO

ListHash( hCustomers, "A hash transferred from a table (single value)" )

hCustomers := { => } // Declare / define an empty hash

DBGOTOP()

WHILE .NOT. EOF()

hCustomers[ CUST_ID ] := { CUST_NAME, CUST_SNAM, CUST_FDAT, CUST_ACTV, CUST_BLNCE }

DBSKIP()

ENDDO

ListHash( hCustomers, "A hash transferred from a table (multiple values)" )

RETURN // Table2Hash()

*-._.-._.-._.-._.-._.-._.-._.-._.-._.-._.-._.-._.-._.-._.-._.-._.-._.-._.-._.-._.-._.-._.-._.-._.-._.

PROCEDURE Hash2Table()

LOCAL h1Record,;

c1Key,;

a1Record,;

n1Field

FOR EACH h1Record IN hCustomers

c1Key := h1Record:__ENUMKEY()

a1Record := h1Record:__ENUMVALUE()

DBAPPEND()

FIELDPUT( 1, c1Key )

AEVAL( a1Record, { | x1, n1 | FIELDPUT( n1 + 1 , x1 ) } )

NEXT h1Record

DBGOTOP()

?

? "Data transferred from hash to table :"

?

WHILE ! EOF()

? STR( RECN(), 5), ''

FOR n1Field := 1 TO FCOUNT()

?? FIELDGET( n1Field ), ''

NEXT n1Field

DBSKIP()

ENDDO

RETURN // Hash2Table()

*-._.-._.-._.-._.-._.-._.-._.-._.-._.-._.-._.-._.-._.-._.-._.-._.-._.-._.-._.-._.-._.-._.-._.-._.-._.

PROCEDURE ListHash( hHash, cComment )

LOCAL x1Pair

cComment := IF( HB_ISNIL( cComment ), '', cComment )

?

? cComment // , "-- Type :", VALTYPE( hHash ), "size:", LEN( hHash )

?

IF HB_ISHASH( hHash )

FOR EACH x1Pair IN hHash

nIndex := x1Pair:__ENUMINDEX()

x1Key := x1Pair:__ENUMKEY()

x1Value := x1Pair:__ENUMVALUE()

? cLMarj, NTrim( nIndex )

* ?? '', VALTYPE( x1Pair )

?? '', x1Key, "=>"

* ?? '', VALTYPE( x1Key )

* ?? VALTYPE( x1Value )

IF HB_ISARRAY( x1Value )

AEVAL( x1Value, { | x1 | QQOUT( '', x1 ) } )

ELSE

?? '', x1Value

ENDIF

NEXT

ELSE

? "Data type error; Expected hash, came", VALTYPE( hHash )

ENDIF HB_ISHASH( hHash )

RETURN // ListHash()

*-._.-._.-._.-._.-._.-._.-._.-._.-._.-._.-._.-._.-._.-._.-._.-._.-._.-._.-._.-._.-._.-._.-._.-._.-._.

FUNCTION MakUseTable() // Make / USE table

LOCAL cTablName := "CUSTOMER.DBF"

LOCAL lRetval, aStru, aData, a1Record

IF FILE( cTablName )

USE (cTablName)

ELSE

aStru := { { "CUST_ID", "C", 5, 0 },;

{ "CUST_NAME", "C", 10, 0 },;

{ "CUST_SNAM", "C", 10, 0 },;

{ "CUST_FDAT", "D", 8, 0 },;

{ "CUST_ACTV", "L", 1, 0 },;

{ "CUST_BLNCE", "N", 11, 2 } }

*

* 5-th parameter of DBCREATE() is alias -

* if not given then WA is open without alias

* ^^^^^^^^^^^^^

DBCREATE( cTablName, aStru, , .F., "CUSTOMER" )

aData := { { "CC001", "Pierce", "Firth", 0d20120131, .T., 150.00 },;

{ "CC002", "Stellan", "Taylor", 0d20050505, .T., 0.15 },;

{ "CC003", "Chris", "Cherry", 0d19950302, .F., 0 },;

{ "CC004", "Amanda", "Baranski", 0d20011112, .T., 12345.00 } }

FOR EACH a1Record IN aData

CUSTOMER->(DBAPPEND())

AEVAL( a1Record, { | x1, nI1 | FIELDPUT( nI1, X1 ) } )

NEXT a1Record

DBGOTOP()

ENDIF

lRetval := ( ALIAS() == "CUSTOMER" )

RETURN lRetval // MakUseTable()

*-._.-._.-._.-._.-._.-._.-._.-._.-._.-._.-._.-._.-._.-._.-._.-._.-._.-._

Databases (PDF)

Author : G.C. Reddy

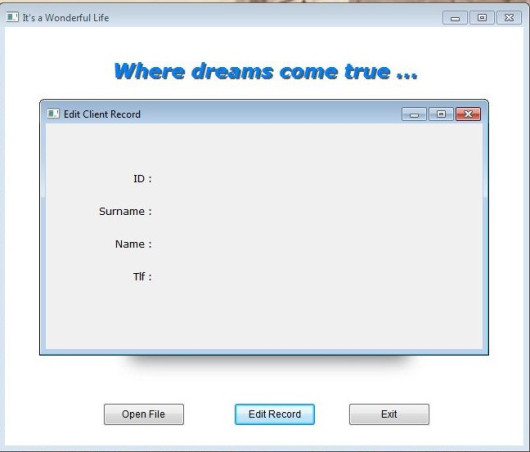

We are continuing with Viva_HMG.hbp, Main.prg and Main.fmg. Now we will assign real actions, other than MsgBox(), to our two buttons: Open File and Edit Record. Open File doesn't require GUI controls (at least for now), so we can begin with it. For Open File we first need a file (a table). Here it is, a table with four fields, Clients.dbf:

No: Field Name Type Width Dec
--- ---------- ---- ----- ---
  1 CLI_ID     N        5   0
  2 CLI_SNAM   C       12   0
  3 CLI_NAME   C       12   0
  4 CLI_TLF    C       11   0

And then add a little routine to Main.prg to open (USE) it:

PROCEDURE OpenTable()

IF FILE( "CLIENTS.DBF" )

USE CLIENTS

ELSE

MsgStop( "Clients.dbf file not found !")

ENDIF

RETURN // OpenTable()

And assign this procedure to ACTION of Open File button.

Now we can begin the Edit Record task. For this task we need a separate form, a sub form. Let’s begin: choose “New form” from the tool bar and assign a name: EditReco. Assign a title: “Edit Record”, and a type: MODAL. Our table has four fields, so we first need four LABELs:

Names : lblCLI_ID, lblCLI_SNAM, lblCLI_NAME, lblCLI_TLF

Values ( Captions ) : ID, Surname, Name, Tlf

Rows : 60, 100, 140, 180

Cols : 60, 60, 60, 60

Widths : 70, 70, 70, 70

Alignment : RIGHT, RIGHT, RIGHT, RIGHT

We can see our job at work:

Now we need a place to display the current data and accept user input. The control for this purpose is the text box, so we need to define four text boxes, one for each field in the table.

The button of text box in the IDE tool bar is :

Names : txbCLI_ID, txbCLI_SNAM, txbCLI_NAME, txbCLI_TLF;

Rows : 55, 95, 135, 175

Col : 140

DataTypes : First : NUMERIC, others : CHARACTER

We can see our job at work:

Well …

But where is the table data ?

To see the table data we need to assign the field values to the text boxes as their values.

Again, a little procedure:

PROCEDURE ReadData()

   EditReco.txbCLI_ID.Value   := CLIENTS->CLI_ID
   EditReco.txbCLI_SNAM.Value := CLIENTS->CLI_SNAM
   EditReco.txbCLI_NAME.Value := CLIENTS->CLI_NAME
   EditReco.txbCLI_TLF.Value  := CLIENTS->CLI_TLF

RETURN // ReadData()

and add a call to this procedure to the ON INIT event of the EditReco form.

The result :

Everything is OK ?

No !

This is only the first record of the table; how will we see the others ?

Yes, we now need yet another feature: navigation, that is, travelling between the records of the table.

But before navigation, we have a problem: Open Table must be processed before Edit Record.

Otherwise a run time error will occur: Alias does not exist.

What can we do ?

– Discard the Open Table button and open the table automatically, at the beginning of the program or at the beginning of editing.

– Before editing, check the table; if it isn’t open,

– a) open it automatically, or

– b) warn the user and don’t load the Edit Record form.

Probably the most convenient is: disable the Edit Record button until the table is open.

First a mini procedure :

PROCEDURE Initialize()

Main.btnEditRec.Enabled := .F.

RETURN // Initialize()

And then add this procedure to the ON INIT event of the Main form:

Last point: enable the button after the table is opened (USE):

PROCEDURE OpenTable()

IF FILE( "CLIENTS.DBF" )

USE CLIENTS

Main.btnEditRec.Enabled := .T.

ELSE

MsgStop( "Clients.dbf file not found !")

ENDIF

RETURN // OpenTable()

Run and see:

Before Open File :

After Open File:

Now we can pass to navigation:

We need seven buttons: Go Top, Go Next, Go Previous, Go Last, Save, Discard, Exit.

Name: btnGoTop, Caption : Top, Col : 50, Row: 220, Height: 28, Width: 60

Name: btnGoNext, Caption : Next, Col : 130, Row: 220, Height: 28, Width: 60

Name: btnPrevious, Caption : Previous, Col : 200, Row: 220, Height: 28, Width: 60

Name: btnGoLast, Caption : Last, Col : 270, Row: 220, Height: 28, Width: 60

Name: btnSave, Caption : Save, Col : 380, Row: 60, Height: 28, Width: 100

Name: btnDiscard, Caption : Discard, Col : 380, Row: 140, Height: 28, Width: 100

Name: btnExit, Caption : Exit, Col : 380, Row: 220, Height: 28, Width: 100

Common: Font Name: Tahoma, Font Size: 9

Actions :

btnGoTop: ( DBGOTOP(), ReadData() )

btnGoNext: ( DBSKIP(), ReadData() )

btnPrevious: ( DBSKIP( -1 ), ReadData() )

btnGoLast: ( DBGOBOTTOM(), ReadData() )

btnSave: SaveData()

btnDiscard: ReadData()

btnExit: ThisWindow.Release

Note that the actions of the first four buttons each include two actions, separated by a comma and enclosed in parentheses. With this notation we can define more than one action together.
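One caveat with the Go Next action as written: DBSKIP() from the last record moves the record pointer to the end-of-file position, where every field reads as blank. A minimal sketch of a guarded version follows; GoNext is a hypothetical procedure name that would replace ( DBSKIP(), ReadData() ) as the button's action, and it relies on the ReadData() procedure defined earlier in Main.prg.

```harbour
PROCEDURE GoNext()   // hypothetical replacement for ( DBSKIP(), ReadData() )

   CLIENTS->( dbSkip() )

   IF CLIENTS->( Eof() )
      // Skipped past the last record: step back onto it
      CLIENTS->( dbGoBottom() )
   ENDIF

   ReadData()

   RETURN // GoNext()
```

Go Previous does not need the same guard: skipping backwards from the first record sets BOF() but leaves the record pointer on the first record.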

SaveData() is the inverse of ReadData(): it copies the values of the text boxes to the table fields.

PROCEDURE SaveData() // Save data from text boxes to table

CLIENTS->CLI_ID := EditReco.txbCLI_ID.Value

CLIENTS->CLI_SNAM := EditReco.txbCLI_SNAM.Value

CLIENTS->CLI_NAME := EditReco.txbCLI_NAME.Value

CLIENTS->CLI_TLF := EditReco.txbCLI_TLF.Value

RETURN // SaveData()

Discard simply re-reads the data from the table.

The result:

To be continued …

Alias :

The name of a work area; an alternate name given to a database file. Aliases are often used to give database files descriptive names and are assigned when the database file is opened. If no alias is specified when the database file is USEd, the name of the database file becomes the alias.

An alias can be used to reference both fields and expressions (including user-defined functions). In order to alias an expression, the expression must be enclosed in parentheses.

See Also: Work Area
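As a small illustration of aliased fields and aliased expressions, here is a console sketch. The table "demo" and its NAME field are made up for this example only and are created by the program itself.

```harbour
PROCEDURE Main()

   // Hypothetical demo table, created only for this example
   DBCREATE( "demo", { { "NAME", "C", 20, 0 } } )

   USE demo ALIAS Cust NEW       // explicit alias given when the file is opened
   Cust->( DBAPPEND() )          // aliased expression: must be in parentheses
   Cust->NAME := "Smith"         // aliased field
   ? Alias()                     // CUST
   ? Cust->( RecNo() )           // 1
   Cust->( DBCLOSEAREA() )

   USE demo NEW                  // no ALIAS clause: the file name becomes the alias
   ? Alias()                     // DEMO
   DBCLOSEAREA()

RETURN
```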

Attribute :

As a formal DBMS term, refers to a column or field in a table or database file.

See Also: Column, Field

Beginning of File :

The top of the database file. In Clipper language there is no beginning of file area or record. Instead, it is indicated by BOF() returning true (.T.) if an attempt is made to move the record pointer above the first record in the database file or the database file is empty.

Cell :

In a table, a cell is the intersection of a Row and a Column.

Column :

A database term used to describe a field in a table or database file.

See Also: Field

Concurrency :

The degree to which data can be accessed by more than one user at the same time.

Condition :

A logical expression that determines whether an operation will take place. With database commands, a logical expression that determines what records are included in an operation. Conditions are specified as arguments of the FOR or WHILE clause.

See Also: Scope

Controlling/Master Index :

The index currently being used to refer to records by key value or sequential record movement commands.

See Also: Index, Natural Order

Database :

An aggregation of related operational data used by an application system. A database can contain one or more data files or tables.

See Also: Field, Record, Tuple, View

DBMS :

An acronym for the term database management system. A DBMS is a software system that mediates access to a database through a data manipulation language.

Delimited File :

A text file that contains variable-length database records with each record separated by a carriage return/linefeed pair (CHR(13) + CHR(10)) and terminated with an end of file mark (CHR(26)). Each field within a delimited file is variable length, not padded with either leading or trailing spaces, and separated by a comma. Character strings are optionally delimited to allow for embedded commas.

End of File :

The bottom of a database file. In Clipper language, this is LASTREC() + 1 and is indicated by EOF() returning true (.T.).
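The behavior of BOF() and EOF() described in the Beginning of File and End of File entries can be observed with a short console sketch. The "demo" table is hypothetical and created by the program; the comments show what is expected with the standard DBF drivers.

```harbour
PROCEDURE Main()

   // Hypothetical demo table, created only for this example
   DBCREATE( "demo", { { "NAME", "C", 20, 0 } } )
   USE demo NEW

   ? BOF(), EOF()                        // empty file: .T. .T.

   DBAPPEND()
   FIELD->NAME := "A"
   DBAPPEND()
   FIELD->NAME := "B"

   DBGOTOP()
   DBSKIP( -1 )                          // attempt to move above the first record
   ? BOF(), RecNo()                      // .T. and the pointer stays on record 1

   DBGOBOTTOM()
   DBSKIP()                              // move past the last record
   ? EOF(), RecNo() == LastRec() + 1     // .T. .T.

   DBCLOSEAREA()

RETURN
```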

Field :

The basic column unit of a database file. A field has four attributes: name, type, length, and decimals if the type is numeric.

See Also: Database, Record, Tuple, Vector, View

Field Variable :

A variable that refers to data in a database field, as opposed to data in memory.

See Also: Local Variable, Memory Variable, Variable

Index :

An ordered set of key values that provides a logical ordering of the records in an associated database file. Each key in an index is associated with a particular record in the database file. The records can be processed sequentially in key order, and any record can be located by performing a SEEK operation with the associated key value.

See Also: Controlling/Master Index, Key Value, Natural Order

Join :

An operation that takes two tables as operands and produces one table as a result. It is, in fact, a combination of other operations including selection and projection.

See Also: Projection, Selection

Key Expression :

An expression, typically based on one or more database fields, that when evaluated, yields a key value for a database record. Key expressions are most often used to create indexes or for summarization operations.

See Also: Index, Key Value

Key Value :

The value produced by evaluating a key expression. When placed in an index, a key value identifies the logical position of the associated record in its database file.

See Also: Index, Key Expression
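To make the terms index, key expression and key value concrete, here is a console sketch under stated assumptions: the "demo" table, its NAME field and the index file name are made up for the example, and the default DBF driver is assumed.

```harbour
PROCEDURE Main()

   // Hypothetical demo table, created only for this example
   DBCREATE( "demo", { { "NAME", "C", 20, 0 } } )
   USE demo NEW

   DBAPPEND()
   FIELD->NAME := "Smith"
   DBAPPEND()
   FIELD->NAME := "Jones"

   // Key expression: Upper( FIELD->NAME ); each record yields one key value
   INDEX ON Upper( FIELD->NAME ) TO demo

   DBGOTOP()
   ? FIELD->NAME                 // Jones: first record in key order

   SEEK "JONES"                  // locate a record by its key value
   ? Found(), RecNo()            // .T. and record 2

   SET ORDER TO 0                // back to natural order
   DBGOTOP()
   ? FIELD->NAME                 // Smith: first record as entered

   DBCLOSEAREA()

RETURN
```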

Master Index :

The index currently being used to refer to records by key value or sequential record movement commands.

See Also: Controlling/Master Index

Memo Type :

A special database field type consisting of one or more characters in the extended character set. The maximum size of a memo field in Clipper language is 65,534 bytes. A memo field differs from a character string only in that it is stored in a separate memo (.DBT) file and its length is variable.

See Also: Character String

Natural Order :

For a database file, the order determined by the sequence in which records were originally entered into the file. Also called unindexed order.

See Also: Index

Normalization :

The process of elimination and consolidation of redundant data elements in a database system.

Projection :

A DBMS term specifying a subset of fields. In Clipper, the analogy is the FIELDS clause.

See Also: Join, Selection

Query :

A request for information to be retrieved from a database. Alternately, a data structure in which such a request is encoded.

Record :

The basic row unit of a database file consisting of one or more field elements.

See Also: Database, Field, Table, Tuple

Relation :

A link between database files that allows the record pointer to move in more than one database file based on the value of a common field or expression. This allows information to be accessed from more than one database file at a time.

Relational Database System :

A system that stores data in rows and columns, without system dependencies within the data. In other words, relationships between different databases are not stored in the actual database itself, as is the case in a system that uses record pointers.

Row :

A group of related column or field values that are treated as a single entity. It is the same as a Clipper language record.

See Also: Column, Field, Record

Search Condition :

See : Condition, Scope

Scope :

In a database command, a clause that specifies a range of database records to be addressed by the command. The scope clause uses the qualifiers ALL, NEXT, RECORD, and REST to define the record scope.

See Also: Condition
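The difference between a condition (FOR, WHILE) and a scope (ALL, NEXT, RECORD, REST) can be illustrated with the COUNT command. This is a sketch on a hypothetical "demo" table with a numeric NUM field holding the values 1 to 10, created by the program itself.

```harbour
PROCEDURE Main()

   LOCAL nCount, i

   // Hypothetical demo table with records NUM = 1 .. 10
   DBCREATE( "demo", { { "NUM", "N", 3, 0 } } )
   USE demo NEW
   FOR i := 1 TO 10
      DBAPPEND()
      FIELD->NUM := i
   NEXT

   COUNT TO nCount FOR FIELD->NUM > 4      // condition, default scope ALL
   ? nCount                                // 6

   DBGOTOP()
   COUNT TO nCount NEXT 3                  // scope: three records from the current one
   ? nCount                                // 3

   DBGOTO( 8 )
   COUNT TO nCount REST                    // scope: current record to end of file
   ? nCount                                // 3

   DBGOTOP()
   COUNT TO nCount WHILE FIELD->NUM < 4    // stops at the first record that fails
   ? nCount                                // 3

   DBCLOSEAREA()

RETURN
```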

SDF File :

A text file that contains fixed-length database records with each record separated by a carriage return/linefeed pair (CHR(13) + CHR(10)) and terminated with an end of file mark (CHR(26)). Each field within an SDF file is fixed-length with character strings padded with trailing spaces and numeric values padded with leading spaces. There are no field separators.

See Also: Database, Delimited File, Text File
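Both text formats can be produced with COPY TO. The following is a minimal sketch; the "demo" table, its fields and the output file names are hypothetical. Viewing the two files side by side shows the comma-separated, variable-length layout of the delimited file against the fixed-length layout of the SDF file.

```harbour
PROCEDURE Main()

   // Hypothetical demo table, created only for this example
   DBCREATE( "demo", { { "NAME", "C", 10, 0 }, { "QTY", "N", 4, 0 } } )
   USE demo NEW

   DBAPPEND()
   FIELD->NAME := "Smith"
   FIELD->QTY  := 7

   COPY TO demo_del.txt DELIMITED     // variable-length fields separated by commas
   COPY TO demo_sdf.txt SDF           // fixed-length fields, no separators

   ? MemoRead( "demo_del.txt" )
   ? MemoRead( "demo_sdf.txt" )

   DBCLOSEAREA()

RETURN
```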

Selection :

A DBMS term that specifies a subset of records meeting a condition. The selection itself is obtained with a selection operator. In Clipper language, the analogy is the FOR clause.

Separator :

The character or set of characters that differentiate fields or records from one another. In Clipper language, the DELIMITED and SDF file types have separators. The DELIMITED file uses a comma as the field separator and a carriage return/linefeed pair as the record separator. The SDF file type has no field separator, but also uses a carriage return/linefeed pair as the record separator.

See Also: Delimiter

Sort Order :

Describes the various ways database files and arrays are ordered.

. Ascending

Causes the order of data in a sort to be from lowest value to highest value.

. Descending

Causes the order of data in a sort to be from highest value to lowest value.

. Chronological

Causes data in a sort to be ordered based on a date value, from earliest to most recent.

. ASCII

Causes data in a sort to be ordered according to the ASCII Code values of the data to be sorted.

. Dictionary

The data in a sort is ordered in the way it would appear if the items sorted were entries in a dictionary of the English language.

. Collating Sequence

Data in a sort will be placed in sequence following the order of characters in the Extended Character Set.

. Natural

The order in which data was entered into the database.

Table :

A DBMS term defining a collection of column definitions and row values. In Clipper, it is represented and referred to as a database file.

Tuple :

A formal DBMS term that refers to a row in a table or a record in a database file. In DIF files, tuple also refers to the equivalent of a table record.

See Also: Database, Field, Record

Update :

The process of changing the value of fields in one or more records. Database fields are updated by various commands and the assignment operator.

Vector :

In a DIF file, vector refers to the equivalent of a table field.

See Also: Database, Field, Record, Tuple

View :

A DBMS term that defines a virtual table. A virtual table does not actually exist but is derived from existing tables and maintained as a definition. The definition in turn is maintained in a separate file or as an entry in a system dictionary file. In Clipper, views are supported only by DBU.EXE and are maintained in (.vew) files.

See Also: Database, Field, Record

Work Area :

The basic containment area of a database file and its associated indexes. Work areas can be referred to by alias name, number, or a letter designator.

See Also: Alias